Dawkins describes his algorithm in the following way:

It again begins by choosing a random sequence of 28 letters, just as before:

WDLTMNLT DTJBKWIRZREZLMQCO P

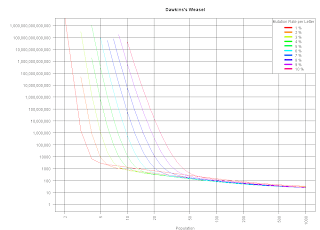

It now 'breeds from' this random phrase. It duplicates it repeatedly, but with a certain chance of random error - 'mutation' - in the copying. The computer examines the mutant nonsense phrases, the 'progeny' of the original phrase, and chooses the one which, however slightly, most resembles the target phrase, METHINKS IT IS LIKE A WEASEL. [...] the procedure is repeated.

In my previous posts, I explained how this can be transformed into a program:

1. choose random string

2. copy string n times with mutation. NOTE: at this step you don't know which letters are correct in place, so no letter is safe from being mutated!

3. choose best fitting string. NOTE: "best fitting" is generally understood to mean the string with the most correct letters; the fit is expressed by a number between 0 and 28

4. Stop, if the number of correct letters is 28, otherwise

5. goto 2
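
The steps above can be sketched in Python. This is only an illustration, not Dawkins's original program: the alphabet (26 capitals plus the space), the number of copies per generation (`n_children`) and the mutation rate (`rate`) are assumptions, since the quoted passage does not give them.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "   # assumed alphabet: 26 capitals plus space
TARGET = "METHINKS IT IS LIKE A WEASEL"   # 28 characters


def random_string(rng, length=len(TARGET)):
    """Step 1: choose a random string."""
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def mutate(parent, rate, rng):
    """Step 2: copy with mutation. Every position may change, correct or not."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c
                   for c in parent)


def fitness(candidate):
    """Number of letters correct in place (0 to 28)."""
    return sum(a == b for a, b in zip(candidate, TARGET))


def weasel(n_children=100, rate=0.04, seed=0, max_generations=100_000):
    # n_children and rate are illustrative values, not taken from the source.
    rng = random.Random(seed)
    current = random_string(rng)
    for generation in range(max_generations):
        if fitness(current) == len(TARGET):       # step 4: stop at 28 correct
            return current, generation
        children = [mutate(current, rate, rng) for _ in range(n_children)]
        current = max(children, key=fitness)      # step 3: best fitting string
    return current, max_generations


if __name__ == "__main__":
    phrase, generations = weasel()
    print(phrase, "after", generations, "generations")
```

Note that `mutate` never consults `fitness`: the copying step has no idea which letters are already right.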

Put more abstractly, with the fitness evaluation treated as a black box:

1. choose random string

2. copy string n times with mutation.

3. ask oracle which string it likes best

4. Stop, if the number of correct letters is 28, otherwise

5. goto 2

Evaluating a fitness function for an algorithm is like questioning an oracle. Even Robert Marks's and Richard Dembski's web site says so - though they don't think it through.

So, how does the oracle work in Dawkins's case? You present it with a list of strings and it just tells you which string is best ("The computer examines the mutant nonsense phrases, the 'progeny' of the original phrase, and chooses the one which, however slightly, most resembles the target phrase, METHINKS IT IS LIKE A WEASEL."). You don't have to know how the oracle deems one string better than another: it could be that it prefers the string with the most letters correctly in place, or the one which shares the longest substring with its (hidden) target. Perhaps it looks at how many letters are right from the beginning of the phrase, preferring METHINKS IT IS FROGLIKE XXX to BETHINKS IT IS LIKE A WEASEL, or it prefers correct vowels to correct consonants. It could be a combination of these on Fridays, and something else on Saturdays...
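
To illustrate that the breeding loop does not care how the oracle decides, here is a small Python sketch with two interchangeable oracles. All names (`breed`, `most_letters_in_place`, `longest_correct_prefix`, `is_done`) are made up for this example, and the alphabet, number of copies and mutation rate are assumed values. The stop test is passed in as a separate yes/no question, which is also an assumption about how the loop learns that it has finished.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "   # assumed alphabet
TARGET = "METHINKS IT IS LIKE A WEASEL"   # hidden: only the oracles look at it


def most_letters_in_place(candidates):
    """Oracle A: prefers the string with the most letters correct in place."""
    return max(candidates, key=lambda s: sum(a == b for a, b in zip(s, TARGET)))


def longest_correct_prefix(candidates):
    """Oracle B: prefers the string that is right for longest from the start."""
    def prefix_length(s):
        n = 0
        for a, b in zip(s, TARGET):
            if a != b:
                break
            n += 1
        return n
    return max(candidates, key=prefix_length)


def breed(oracle, is_done, length=28, n_children=100, rate=0.04,
          seed=0, max_generations=20_000):
    """The loop only ever asks the oracle to pick one string from a list."""
    rng = random.Random(seed)
    current = "".join(rng.choice(ALPHABET) for _ in range(length))
    for generation in range(max_generations):
        if is_done(current):
            return current, generation
        children = ["".join(rng.choice(ALPHABET) if rng.random() < rate else c
                            for c in current)
                    for _ in range(n_children)]
        current = oracle(children)
    return current, max_generations


if __name__ == "__main__":
    for oracle in (most_letters_in_place, longest_correct_prefix):
        phrase, generations = breed(oracle, lambda s: s == TARGET)
        print(oracle.__name__, "->", phrase, "after", generations, "generations")
```

Given the two phrases from the text, `longest_correct_prefix` picks METHINKS IT IS FROGLIKE XXX, while `most_letters_in_place` picks BETHINKS IT IS LIKE A WEASEL. The code of `breed` is the same in both cases.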

Could Dembski's algorithm work with such an oracle? No. Here is his algorithm:

1. choose random string

2. ask oracle which letters are in place

3. if all letters are in place: STOP. Otherwise:

4. mutate letters which are not in place

5. goto 2
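
A rough Python sketch of this procedure shows what it demands from the oracle: a per-position answer instead of a single preferred string. The function names are hypothetical, the alphabet is assumed, and "mutate" is read here as redrawing every incorrect letter at random, which is one possible interpretation.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "   # assumed alphabet
TARGET = "METHINKS IT IS LIKE A WEASEL"


def letters_in_place(candidate):
    """A much more talkative oracle: it reveals WHICH positions are correct."""
    return [a == b for a, b in zip(candidate, TARGET)]


def partitioned_search(seed=0, max_queries=100_000):
    rng = random.Random(seed)
    current = [rng.choice(ALPHABET) for _ in TARGET]     # choose random string
    for queries in range(1, max_queries + 1):
        in_place = letters_in_place(current)             # ask the oracle
        if all(in_place):                                # all in place: stop
            return "".join(current), queries
        # Redraw only the wrong letters; correct ones are never touched again.
        current = [c if ok else rng.choice(ALPHABET)
                   for c, ok in zip(current, in_place)]
    return "".join(current), max_queries


if __name__ == "__main__":
    phrase, queries = partitioned_search()
    print(phrase, "after", queries, "queries")
```

An oracle that only returns its favourite out of a list of strings cannot be plugged into `partitioned_search`: there is nothing in its answer that says which letters to protect.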

What Dembski exploits is knowledge of the inner workings of the oracle. But knowing how it works allows for a deterministic approach - the Hangman game - which terminates in a few steps...
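
The Hangman approach can be sketched as follows, assuming the same letter-revealing oracle and a 27-character alphabet (both assumptions of this example, as are the function names). Each query is a string made of one repeated letter, so the number of queries is bounded by the size of the alphabet and no randomness is involved.

```python
import string

ALPHABET = string.ascii_uppercase + " "   # assumed alphabet
TARGET = "METHINKS IT IS LIKE A WEASEL"


def letters_in_place(candidate):
    """Oracle that reveals which positions of the candidate are correct."""
    return [a == b for a, b in zip(candidate, TARGET)]


def hangman(oracle, length, alphabet=ALPHABET):
    """Deterministic search: guess one letter at a time, as in Hangman."""
    solved = [None] * length
    queries = 0
    for letter in alphabet:
        if None not in solved:        # every position known: stop early
            break
        queries += 1
        # Ask about a string consisting only of this letter.
        for i, ok in enumerate(oracle(letter * length)):
            if ok:
                solved[i] = letter
    # Assumes every target character is in `alphabet`, so nothing stays None.
    return "".join(solved), queries


if __name__ == "__main__":
    phrase, queries = hangman(letters_in_place, len(TARGET))
    print(phrase, "after", queries, "queries")
```

With these assumptions the phrase is recovered after at most 27 queries, whatever the target is.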